Madrid, December 2022

Fast DDS is the open-source DDS implementation that enables developers to create real-time communication systems with a wide set of features, quality documentation and, of course, unbeatable performance.

To prove that, eProsima conducted thorough performance testing, focusing on latency and throughput and comparing the Fast DDS results with those of other open-source DDS implementations such as Open DDS and Cyclone DDS.

Latency

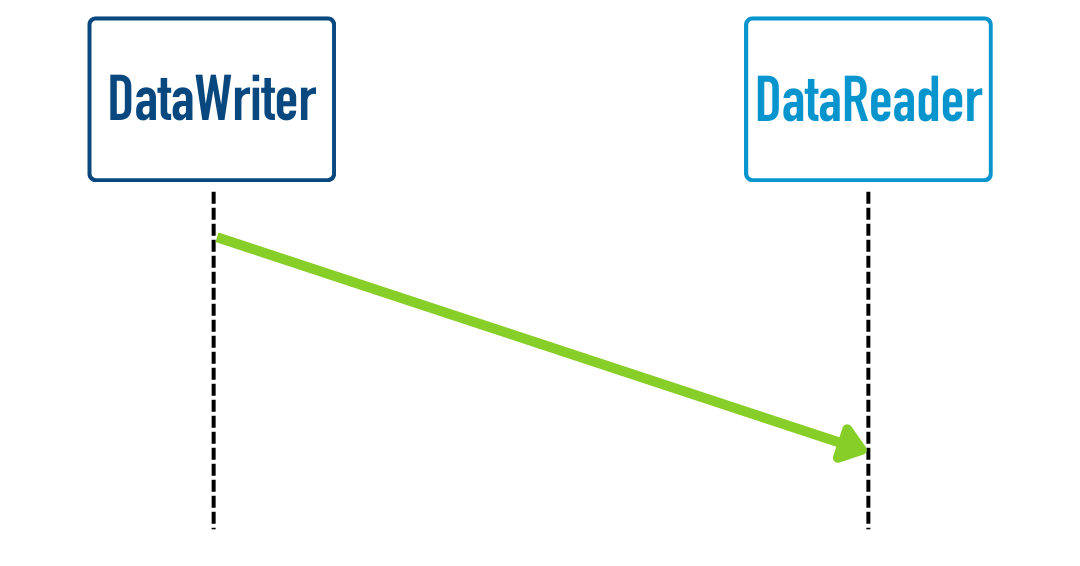

Latency can be defined as the amount of time that it takes for a message to traverse a system. More specifically, in this DDS benchmark, run with the Apex.AI performance test framework, latency is the time it takes for a DataWriter to serialize and send a data message plus the time it takes for a matching DataReader to receive and deserialize it.
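The definition of latency given above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it does not use Fast DDS or the Apex.AI tools, the in-process `queue.Queue` is a stand-in for the transport, `pickle` is a stand-in for DDS serialization, and the function name `measure_one_way_latency_ns` is hypothetical.

```python
import pickle
import queue
import time


def measure_one_way_latency_ns(payload, samples=100):
    """Time serialize + send + receive + deserialize for each sample."""
    channel = queue.Queue()  # assumption: stand-in for the DDS transport
    latencies = []
    for _ in range(samples):
        start = time.perf_counter_ns()
        channel.put(pickle.dumps(payload))      # writer side: serialize and send
        received = pickle.loads(channel.get())  # reader side: receive and deserialize
        latencies.append(time.perf_counter_ns() - start)
        assert received == payload              # sanity check, outside the timed span
    return latencies


latencies = measure_one_way_latency_ns(bytes(16 * 1024))  # 16 KiB payload
print(f"mean latency: {sum(latencies) / len(latencies):.0f} ns over {len(latencies)} samples")
```

The absolute numbers printed by this sketch say nothing about any DDS implementation; it only shows which steps fall inside the measured interval.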

NOTE: Due to its architecture, the neutral test framework used for a fair comparison adds latency to the results.

Latency Test Results

Intra-process

The following graphs show the Fast DDS 2.8.0, Cyclone DDS 0.9.0b1 and Open DDS 3.13.2 latency test results for intra-process delivery. Intra-process delivery is a DDS feature that accelerates communication between entities inside the same process by avoiding the overhead of the transport layer.

Figure 1.1a shows that Fast DDS, both reliable and with the zero-copy mechanism, achieves lower latency than the other DDS implementations. The difference becomes more evident as the payload increases, showing that Fast DDS is the most performance-stable implementation. Moreover, the Open DDS implementation crashes with Arrays 256 KB, 1 MB and 4 MB.

Fig 1.1a Fast DDS 2.8.0 intraprocess vs Cyclone DDS 0.9.0b1 intraprocess & Open DDS 3.13.2 intraprocess

Taking a closer look at this graph, Figure 1.1b shows that Cyclone DDS with loans achieves lower latency only for Array 16KB. For the rest of the arrays, either Fast DDS reliable or Fast DDS zero-copy offers better results, with a particularly marked difference compared to Open DDS and Cyclone DDS with loans.

Fig 1.1b Fast DDS 2.8.0 intraprocess vs Cyclone DDS 0.9.0b1 intraprocess & Open DDS 3.13.2 intraprocess up to 64 KB

Inter-process

The performance test also covers latency for inter-process delivery, in which separate processes communicate with each other over a shared-memory-based transport layer suitable for real-time publish-subscribe DDS communication. In this specific case, all inter-process tests are run with both processes on the same host machine.

Figure 1.2a shows that Open DDS latency peaks sharply in comparison with all other implementations, before crashing for payloads over 1 MB.

Fig 1.2a Fast DDS 2.8.0 interprocess vs Cyclone DDS 0.9.0b1 interprocess & Open DDS 3.13.2 interprocess

Figure 1.2b compares the inter-process latency of Fast DDS and Cyclone DDS. Both Fast DDS configurations have a lower average latency and a more stable distribution. The difference is especially noticeable above Array 64KB, where the latency of Cyclone DDS without loans increases sharply.
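Average latency and stability of the distribution are two separate properties, and a short sketch can make the distinction concrete. The sample values below are hypothetical and chosen only for illustration; they are not measurements from this benchmark, and the helper name `summarize_latency` is an assumption.

```python
import statistics


def summarize_latency(samples_us):
    """Return the mean, sample standard deviation and worst case (all in µs)."""
    return {
        "mean": statistics.mean(samples_us),
        "stdev": statistics.stdev(samples_us),
        "max": max(samples_us),
    }


# Hypothetical values for illustration only; NOT results from this benchmark.
steady = [100, 102, 98, 101, 99]
spiky = [60, 60, 60, 60, 260]

print("steady:", summarize_latency(steady))
print("spiky: ", summarize_latency(spiky))
```

Both series have the same mean, but the second one has a far larger standard deviation and worst case, which is what a less stable latency distribution looks like in numbers.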

Fig 1.2b Fast DDS 2.8.0 interprocess vs Cyclone DDS 0.9.0b1 interprocess

Zooming into the lower payloads, up to 64 KB, Figure 1.2c shows that Open DDS has a clearly better latency result only for 16KB, while its performance is noticeably worse than its peers for the other arrays (1KB, 4KB and 64KB). Fast DDS and Cyclone DDS show close results for the arrays 1KB and 4KB, and the Fast DDS inter-process configurations become more stable than the rest as the payload grows.

Fig 1.2c Fast DDS 2.8.0 interprocess vs Cyclone DDS 0.9.0b1 interprocess & Open DDS 3.13.2 interprocess up to 64 KB

Throughput

In network computing, throughput is a measure of the amount of information that can be sent or received through the system per unit of time, i.e. how many bits traverse the system every second.

In the Apex.AI test, throughput is measured as the number of bytes received by the DataReader (sent by the DataWriter at a specified rate) per unit of time. This can be summarized in the formula: number of messages received multiplied by the message size, all divided by the elapsed time.
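The formula above can be written as a small function. This is a minimal sketch, not code from the Apex.AI tools: the function name and parameters are hypothetical, and the example assumes a 64 KB payload of 65,536 bytes with no message loss.

```python
def throughput_bytes_per_second(messages_received, message_size_bytes, interval_seconds):
    """Messages received times message size, divided by the measurement interval."""
    if interval_seconds <= 0:
        raise ValueError("interval_seconds must be positive")
    return messages_received * message_size_bytes / interval_seconds


# Assumed example: 100 messages per second for 30 s, all received.
received = 100 * 30
print(throughput_bytes_per_second(received, 64 * 1024, 30))  # 6553600.0
```

With a fixed publication rate and no losses, the result depends only on the rate and the message size, not on which implementation delivered the messages.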

Throughput Test Results

Intra-process

The following graphs show the Fast DDS 2.8.0, Cyclone DDS 0.9.0b1 and Open DDS 3.13.2 throughput test results for intra-process delivery.

Figure 2.1a shows that Fast DDS, both reliable and zero-copy, has the same throughput as Cyclone DDS for all the payloads in the test, while Open DDS, running in the same process, crashes with Array 1MB and Array 4MB.

Fig 2.1a Fast DDS 2.8.0 intraprocess vs Cyclone DDS 0.9.0b1 intraprocess & Open DDS 3.13.2 intraprocess

Zooming into the smaller payloads, Figure 2.1b shows that all the DDS implementations reach the same throughput for intra-process delivery. This equal result shows that all of them are able to sustain the data flow of the Apex.AI test, which does not push them to their throughput limits.

Fig 2.1b Fast DDS 2.8.0 intraprocess vs Cyclone DDS 0.9.0b1 intraprocess & Open DDS 3.13.2 intraprocess up to 64 KB

Inter-process

The following images show the Fast DDS 2.8.0, Cyclone DDS 0.9.0b1 and Open DDS 3.13.2 throughput test results for inter-process delivery. As with intra-process delivery, Figure 2.2a shows that Fast DDS and Cyclone DDS present the same throughput for all the given payloads. Open DDS crashes after Array 1MB, and a degradation of its throughput is visible after Array 256KB.

Fig 2.2a Fast DDS 2.8.0 interprocess vs Cyclone DDS 0.9.0b1 interprocess & Open DDS 3.13.2 interprocess

Taking a closer look at the payloads up to 64 KB, Figure 2.2b shows that the throughput results for all the DDS implementations are the same.

Fig 2.2b Fast DDS 2.8.0 interprocess vs Cyclone DDS 0.9.0b1 interprocess & Open DDS 3.13.2 interprocess up to 64 KB

Conclusions

Fast DDS proves to be the most stable of the tested DDS implementations regarding latency: both Fast DDS reliable and Fast DDS zero-copy present lower latency than Cyclone DDS and Open DDS for the majority of the payloads, in both intra-process and inter-process delivery. Moreover, the bigger the payload, the bigger the gap between the low latency of Fast DDS and that of the other implementations.

Testing Configuration

For the sake of clarity and transparency, the specifications of the test environment in which the performance experiments were run are listed below. The tests were conducted using Apex.AI tools with the following characteristics:

Machine specifications:

- x86_64 with Linux 4.15.0 & Ubuntu 18.04.5

- 8 Intel(R) Xeon(R) CPU E3-1230 v6 @ 3.50GHz

- 32 GB RAM

Software specifications:

- Docker 20.10.7

- Apex performance tests latest (d69a0d72)

- Fast DDS v2.8.0

- Open DDS 3.13.2

- Cyclone DDS 0.9.0b1

Experiments configuration:

- Duration: 30 s

- 1 publisher and 1 subscriber

- Reliable, volatile, keep last 16 communications

- Publication rate of 100 Hz

- Both same process (intra-process) and different processes (inter-process)
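The experiment settings listed above can be captured in a small structure, which also makes it easy to see how much data the test offers per second for a given payload. This is a sketch only: the class and field names are hypothetical and do not correspond to the Apex.AI tool's options, and a 4 MB payload is assumed to be 4 × 1024 × 1024 bytes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExperimentConfig:
    """Hypothetical container for the experiment settings listed above."""
    duration_s: int = 30
    publishers: int = 1
    subscribers: int = 1
    reliability: str = "reliable"
    durability: str = "volatile"
    history_depth: int = 16  # keep last 16
    rate_hz: int = 100

    def messages_offered(self):
        """Total messages published over the whole run."""
        return self.duration_s * self.rate_hz * self.publishers

    def offered_load_bytes_per_s(self, payload_bytes):
        """Upper bound on measurable throughput for a given payload size."""
        return self.rate_hz * payload_bytes * self.publishers


config = ExperimentConfig()
print(config.messages_offered())                         # 3000
print(config.offered_load_bytes_per_s(4 * 1024 * 1024))  # 419430400
```

Because the publication rate is fixed, the offered load is a ceiling on the measured throughput: any implementation that keeps up with the publisher reports the same value.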

FOR MORE INFORMATION:

Please contact