Benchmarking DDS Implementations

Updated 21st December 2022

Several DDS implementations are available, which motivates comparing their performance. In this article, eProsima compares Fast DDS and Eclipse Cyclone DDS in terms of both latency and throughput.

In the majority of the tested cases, Fast DDS exhibits lower and more stable latency than Cyclone DDS. Continue reading for detailed information.

Benchmarking DDS Implementations - INDEX

This article is divided into the following sections:

- Latency Comparison

- Latency Results: Intra-process

- Latency Results: Inter-process

- Latency Results: Conclusion

- Methodology: Measuring Latency

- Latency Tests details

- Throughput Comparison

Latency Comparison

The latency tests have been executed in two different scenarios: intra-process and inter-process. In this specific case, all inter-process tests are run with both processes on the same host machine.

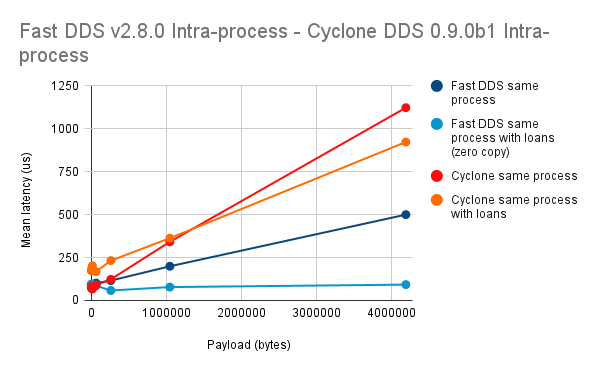

Latency Results: Intra-process

Latency Results: Inter-process

Latency Results: Conclusion

The latency comparison shows that Fast DDS's mean latencies are lower than those of Cyclone DDS in the majority of scenarios, meaning that Fast DDS delivers messages faster in most of the tested cases. The advantage of Fast DDS becomes more noticeable as the payload increases. Furthermore, Fast DDS is also the more stable of the two implementations, as indicated by a slower increase of latency as the payload gets larger.

Methodology: Measuring Latency

Latency is usually defined as the amount of time that it takes for a message to traverse a system. In packet-based networking, latency is usually measured either as one-way latency (the time from the source sending the packet to the destination receiving it), or as the round-trip delay time (the time from source to destination plus the time from the destination back to the source).
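The two definitions above can be illustrated with a minimal Python sketch. The timestamps are hypothetical values chosen for the example, not measurements from this benchmark, and the function names are illustrative only.

```python
def one_way_latency_ns(sent_ns: int, received_ns: int) -> int:
    """Time from the source sending a packet to the destination receiving it."""
    return received_ns - sent_ns


def round_trip_ns(request_sent_ns: int, reply_received_ns: int) -> int:
    """Time from the source sending a packet to the source receiving the reply."""
    return reply_received_ns - request_sent_ns


# Hypothetical timestamps in nanoseconds (assumed, for illustration only).
request_sent = 1_000_000
request_received = 1_040_000
reply_sent = 1_040_000       # the destination replies immediately
reply_received = 1_090_000

forward = one_way_latency_ns(request_sent, request_received)
backward = one_way_latency_ns(reply_sent, reply_received)
rtt = round_trip_ns(request_sent, reply_received)

print(forward, backward, rtt)
```

Note that a one-way measurement compares timestamps taken at two different points, so both must refer to the same clock. A round-trip measurement only needs the clock of the source.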

In this DDS benchmark, performed with the Apex.AI performance tests, latency is defined as the time it takes for a DataWriter to serialize and send a data message plus the time it takes for a matching DataReader to receive and deserialize it.
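As a rough illustration of what this definition covers, the following Python sketch times a serialize, send, receive, and deserialize cycle inside a single process. It is not the Apex.AI framework and does not use any DDS API: `pickle` and `queue.Queue` are stand-ins assumed here for serialization and transport, and `measure_latencies_ns` is a hypothetical helper.

```python
import pickle
import queue
import statistics
import time


def measure_latencies_ns(payload: bytes, samples: int = 100) -> list:
    """Time serialize + send + receive + deserialize for each sample."""
    channel = queue.Queue()  # stand-in for the transport (assumption)
    latencies = []
    for _ in range(samples):
        start = time.perf_counter_ns()
        channel.put(pickle.dumps(payload))       # writer side: serialize and send
        received = pickle.loads(channel.get())   # reader side: receive and deserialize
        end = time.perf_counter_ns()
        if received != payload:
            raise RuntimeError("payload corrupted in transit")
        latencies.append(end - start)
    return latencies


latencies = measure_latencies_ns(b"x" * 1024)
mean_us = statistics.mean(latencies) / 1000
print(f"mean latency: {mean_us:.1f} us over {len(latencies)} samples")
```

The absolute numbers from such a sketch say nothing about either DDS implementation. It only shows which steps fall inside the measured interval.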

NOTE: Due to its architecture, the neutral test framework used for a fair comparison adds latency to the results.

Latency Tests details

Machine specifications:

- X86_64 with Linux 4.15.0 & Ubuntu 18.04.5

- 8 Intel(R) Xeon(R) CPU E3-1230 v6 @ 3.50GHz

- 32 GB RAM

Software specifications:

- Docker 20.10.7

- Apex performance tests latest (d69a0d72)

- Cyclone DDS 0.9.0b1

- Fast DDS v2.8.0

Experiments configuration:

- Duration: 30 s

- 1 publisher and 1 subscriber

- Reliable, volatile, keep last 16 comms

- Publication rate of 100 Hz

- Both same and different processes

DDS QoS

A quick overview of the DDS QoS configuration is provided in the following list. For further details about the purpose of each parameter, please refer to the Fast DDS documentation.

- Reliability: RELIABLE

- History kind: KEEP_LAST

- History depth: 1

- Durability: VOLATILE

Throughput Comparison

As in the latency case, the throughput performance benchmark has been executed in two different scenarios: intra-process and inter-process.

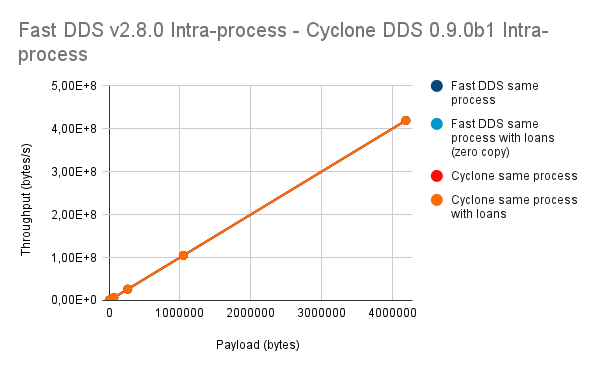

Throughput Results: Intra-process

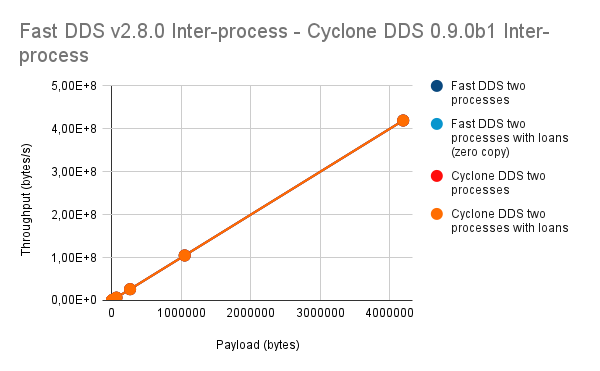

Throughput Results: Inter-process

Throughput Results: Conclusion

The intra- and inter-process comparisons show no throughput difference between Fast DDS and Cyclone DDS for any of the payloads considered. The results are equal because both implementations are able to sustain the data flow generated by the Apex.AI tests, which does not push either of them to its throughput limit.

Methodology: Measuring Throughput

In network computing, throughput is defined as the amount of information that can be sent or received through the system per unit of time, i.e. a measurement of how many bits traverse the system every second.

In the Apex.AI tests, throughput is measured as the number of bytes received by the DataReader (sent by the DataWriter at a specified rate) per unit of time. This can be summarized in the formula: number of messages received multiplied by the message size, all divided by the measurement time.
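The formula can be written as a short Python sketch. The publication rate (100 Hz) and duration (30 s) come from the experiment configuration of this article; the 1024-byte payload is a hypothetical value assumed for the example, and `throughput_bytes_per_s` is an illustrative helper, not part of any framework.

```python
def throughput_bytes_per_s(messages_received: int,
                           message_size_bytes: int,
                           duration_s: float) -> float:
    """Messages received times message size, divided by the measurement time."""
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    return messages_received * message_size_bytes / duration_s


RATE_HZ = 100          # publication rate used in the experiments
DURATION_S = 30        # test duration used in the experiments
PAYLOAD_BYTES = 1024   # hypothetical payload size (assumption)

# If no message is lost, the reader receives every published message.
messages = RATE_HZ * DURATION_S
tput = throughput_bytes_per_s(messages, PAYLOAD_BYTES, DURATION_S)
mbit_per_s = tput * 8 / 1e6

print(f"{messages} messages -> {tput:.0f} B/s ({mbit_per_s:.4f} Mbit/s)")
```

Because the publication rate is fixed, any implementation that delivers every message reports the same value (rate multiplied by payload size), which is consistent with the identical throughput results described in the conclusion above.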

Throughput Tests details

Machine specifications:

- X86_64 with Linux 4.15.0 & Ubuntu 18.04.5

- 8 Intel(R) Xeon(R) CPU E3-1230 v6 @ 3.50GHz

- 32 GB RAM

Software specifications:

- Docker 20.10.7

- Apex performance tests latest (d69a0d72)

- Cyclone DDS 0.9.0b1

- Fast DDS v2.8.0

Experiments configuration:

- Duration: 30 s

- 1 publisher and 1 subscriber

- Reliable, volatile, keep last 16 comms

- Publication rate of 100 Hz

- Both same and different processes

Further information

For any questions about the methodology used to conduct the experiments, the specifics of how to configure Fast DDS, or any other question you may have, please do not hesitate to contact